We started with the goal of reducing the dimensionality of our feature space, i.e., projecting the feature space via PCA onto a smaller subspace, where the eigenvectors form the axes of this new feature subspace.
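To make the projection concrete, here is a minimal NumPy sketch. It assumes the eigenvectors come from the covariance matrix of the centered data; the function name `project_onto_top_k` and the random demo data are hypothetical and only serve as illustration.

```python
import numpy as np


def project_onto_top_k(X, k):
    """Project the rows of X onto the k leading eigenvectors of its covariance matrix."""
    X_centered = X - X.mean(axis=0)            # PCA works on centered data
    cov = np.cov(X_centered, rowvar=False)     # (n_features, n_features)
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]          # re-order from largest to smallest
    W = eigvecs[:, order[:k]]                  # columns are the axes of the new subspace
    return X_centered @ W, W


# Hypothetical demo data: 100 samples, 4 correlated features.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(100, 4)) @ rng.normal(size=(4, 4))

Z, W = project_onto_top_k(X_demo, k=2)
print(Z.shape)  # (100, 2)
```

The columns of `W` are orthonormal, so `Z` holds the coordinates of each sample along the two eigenvector axes.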

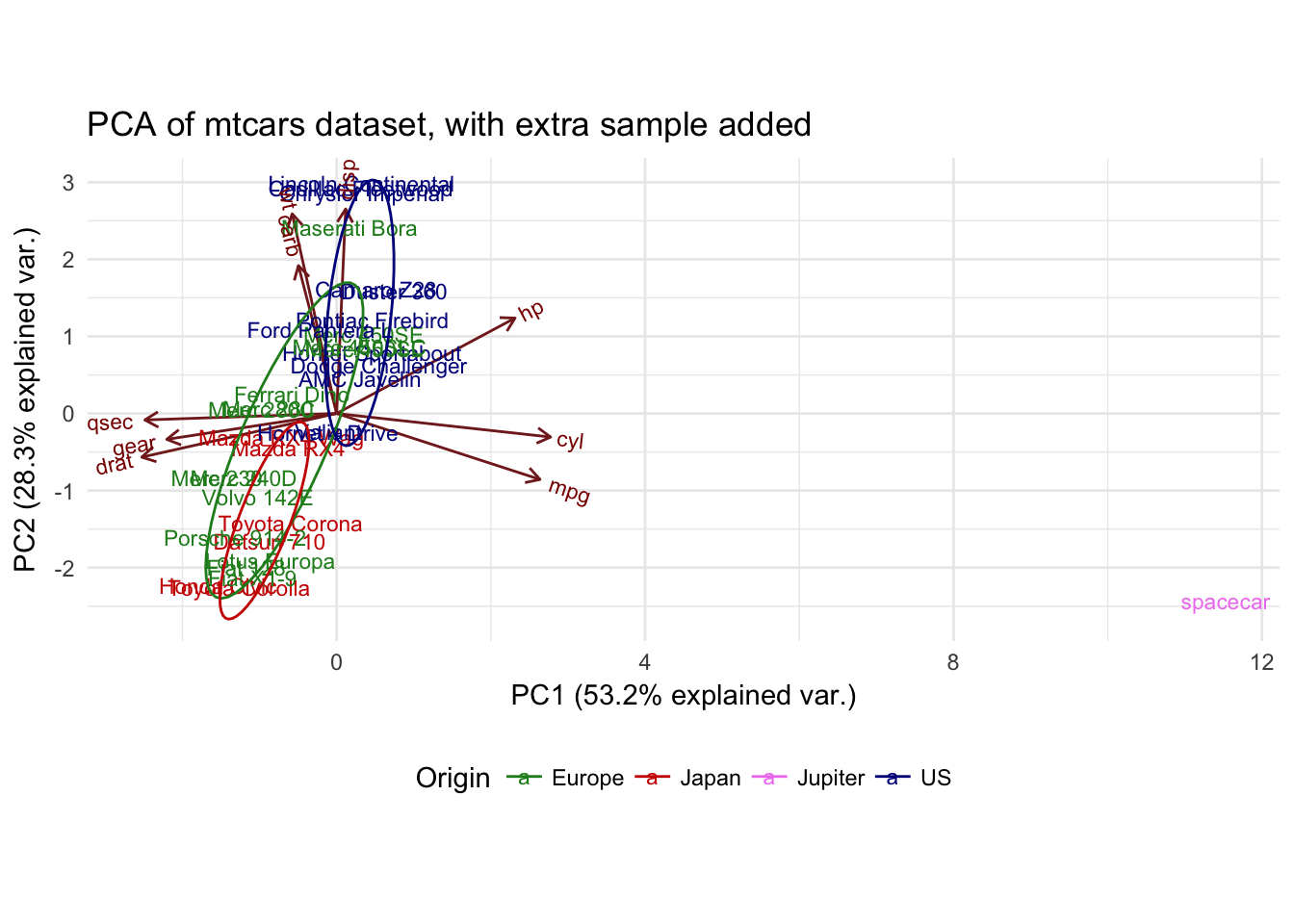

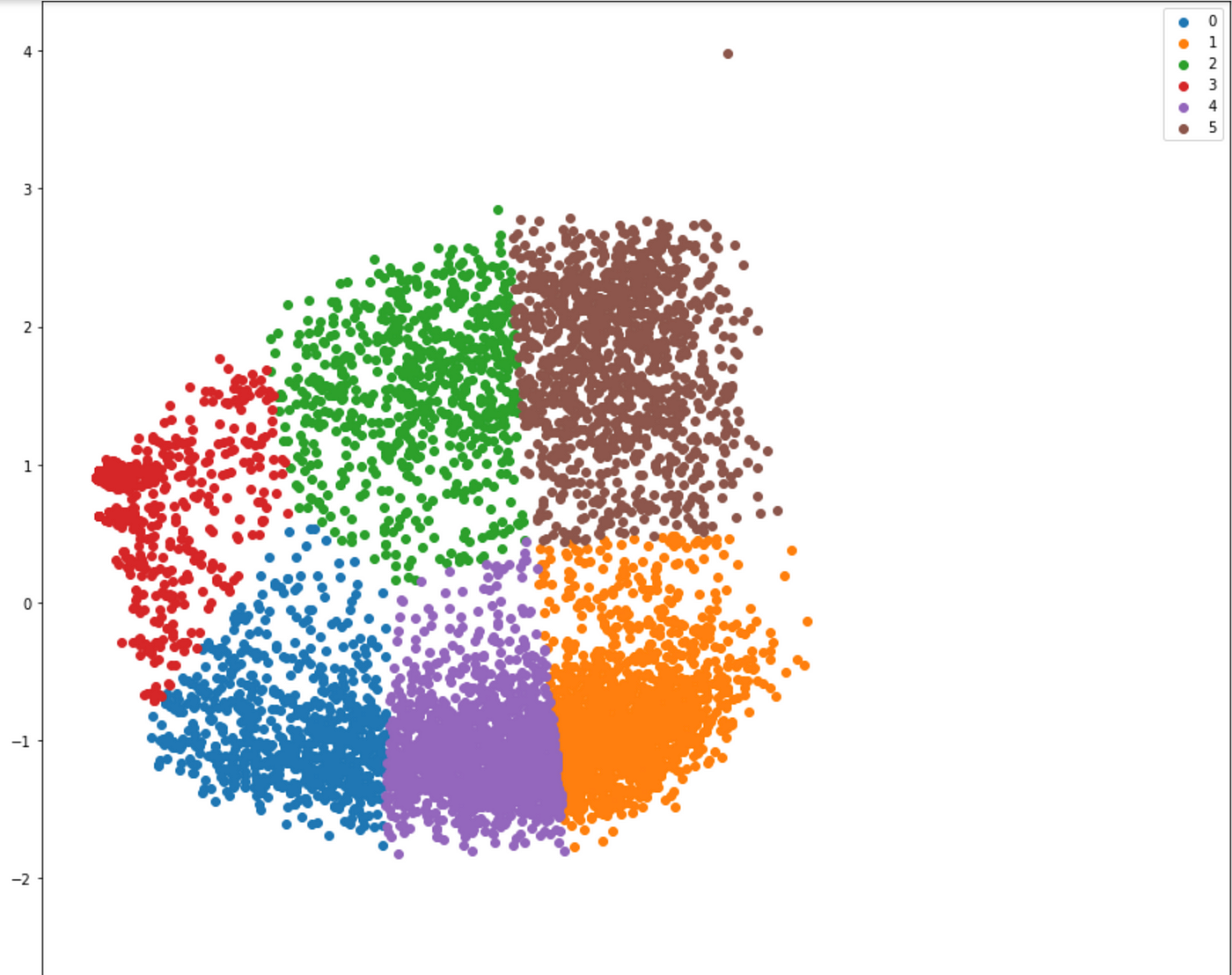

The example uses the iris data set: load it with `datasets.load_iris()` from `sklearn`, take `X = iris.data` and `y = iris.target`, and, since scaling the data is generally a good idea, standardize `X` with `StandardScaler` before calling `PCA().fit_transform(X)`. A plotting helper, `myplot(score, coeff, labels=None)`, then takes the first two columns of the scores as the x and y coordinates and the number of rows of `coeff` as the number of variables.

The data for the supplementary individuals are selected as `ind.sup <- decathlon2[24:27, 1:10]`. The new data must contain columns (variables) with the same names and in the same order as the active data used to compute the PCA.

To retain a fixed share of the variance, import the class with `from sklearn.decomposition import PCA`, make an instance of the model with `pca = PCA(.95)`, and fit it on the training set. This means that scikit-learn chooses the minimum number of principal components such that 95% of the variance is retained.

It is important to mention here that when you use PCA for machine learning, the test data should be transformed using the loading vectors found from the training data; otherwise your model will have difficulty generalizing to unseen data.

In data exploration, we can visualize the PCs and get a better understanding of the underlying processes. In machine learning, on the other hand, working with the PCs means fewer columns, which can significantly reduce computational cost and help reduce overfitting.
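The train/test workflow described above can be sketched as follows. This is only one way to arrange it: the 80/20 split, `random_state=0`, and the variable names are assumptions made for illustration, not taken from the original code.

```python
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()

# Assumed split: 80% training, 20% test (not specified in the text).
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=0
)

# Fit the scaler on the training set only, then apply it to both sets.
scaler = StandardScaler().fit(X_train)
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)

# A float in (0, 1) asks for the fewest components that retain
# at least that share of the variance.
pca = PCA(n_components=0.95)
pca.fit(X_train_std)

# Transform both sets with the loadings learned from the training data.
X_train_pca = pca.transform(X_train_std)
X_test_pca = pca.transform(X_test_std)

print(pca.n_components_, pca.explained_variance_ratio_.sum())
```

Neither the scaler nor the PCA ever sees the test set during fitting, which is the point of the warning about generalization.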

IF new - old > threshold new THEN return to step 1.

You don't want the server to add a default value if an incomplete insert is sent; you only need to set a value in the new columns of the existing rows. The three-line script is correct because you don't want to add a default constraint.

An infrared (IR) spectrum may include several thousand data points (wavenumbers). In such scenarios, where we have many columns and the first few PCs account for most of the variability in the original data (such as 95% of the variance), we can use the first few PCs for data exploration and for machine learning.

Hi, returning to the original article above, I think your solution with the single-line script and the 'default' constraint is bad.

HOW TO ADD NEW PCA COLUMN BACK TO PC

The PCs are ranked by the variance they explain: the first PC explains the highest variance, followed by the second PC, and so on. Looking at the variance explained by each PC, we see that the first PC explains almost all the variance (92.4616%), while the fourth PC explains only 0.5183%.
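As an illustration of how such percentages can be computed, the sketch below derives the share of variance per PC from the eigenvalues of the covariance matrix. The data here are synthetic and hypothetical, so the printed numbers will not match the figures quoted above; the helper name `explained_variance_ratio` is also an assumption.

```python
import numpy as np


def explained_variance_ratio(X):
    """Return the share of total variance explained by each PC, largest first."""
    X_centered = X - X.mean(axis=0)
    eigvals = np.linalg.eigvalsh(np.cov(X_centered, rowvar=False))
    eigvals = np.sort(eigvals)[::-1]           # rank PCs by explained variance
    return eigvals / eigvals.sum()


# Hypothetical data: four columns with very different spreads.
rng = np.random.default_rng(42)
X_demo = rng.normal(size=(200, 4)) * np.array([5.0, 2.0, 1.0, 0.3])

ratios = explained_variance_ratio(X_demo)
cumulative = np.cumsum(ratios)

# Smallest number of PCs whose cumulative share reaches 95%.
n_keep = int(np.searchsorted(cumulative, 0.95) + 1)

for i, (r, c) in enumerate(zip(ratios, cumulative), start=1):
    print(f"PC{i}: {r:.2%} (cumulative {c:.2%})")
print("PCs needed for 95%:", n_keep)
```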
